One of my first SwordSTEM articles was "Is It Important To Weigh Target Values?" In that article I tried to correlate a fighter's win percentage with their preference for scoring high or low value points. In the end it showed that there wasn't really a correlation between hitting higher value targets and winning more.

The data was based on 10 events which had a large number of overlapping participants. But times have changed. I have some better ideas on how to test this, and a much, much bigger data set to work with.

A New Adventure

My new approach is to see how many matches would be overturned by flat scoring vs how many would retain the same winners. The methodology was simple:

- Look up how many times each fighter hit the other.

- Determine who got more hits in.

- Compare that against the actual match winner.
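The three steps above can be sketched in a few lines of Python. This is only an illustration under my own assumptions, not the actual query run against the database: the `classify_match` name, the `(scorer, points)` exchange format, and the choice to count any exchange worth more than 0 points as one hit are all hypothetical.

```python
from collections import Counter

def classify_match(exchanges, actual_winner):
    """Classify one match under flat scoring.

    exchanges: list of (scorer, points) tuples (hypothetical format).
    actual_winner: the fighter recorded as the winner under weighted scoring.
    """
    # Flat scoring: every scoring exchange counts as exactly one hit.
    hits = Counter(scorer for scorer, points in exchanges if points > 0)
    ranked = hits.most_common(2)
    # No hits at all, or both fighters level on hits, is a tie.
    if not ranked or (len(ranked) == 2 and ranked[0][1] == ranked[1][1]):
        return "tie"
    return "no change" if ranked[0][0] == actual_winner else "different result"

# A wins 4-3 on points and 2-1 on hits.
print(classify_match([("A", 2), ("A", 2), ("B", 3)], "A"))
# A wins 4-2 on points, but B lands more hits.
print(classify_match([("A", 4), ("B", 1), ("B", 1)], "A"))
# A wins 6-2 on points, but hits are level at 2-2.
print(classify_match([("A", 3), ("B", 1), ("B", 1), ("A", 3)], "A"))
```

The three return labels mirror the No Change, Different Result, and Tie columns used in the tables at the end of the article.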

Which is all well and good, but how do I know which tournaments to select?

The advantage of working with the 10 events in the first article was that they all had similar rules, allowing them to be treated as the same. Now I am looking at a database of 128 events (541 tournaments), which means that there is a ton of variation.

It is important to filter out tournaments which don't use weighted scoring, as in those you are going to see 100% correlation (plus table error) between the number of times hit and the match winners. My first thought was to use the highest point value seen in the tournament. But I know for a fact that scorekeepers make mistakes, and sometimes organizers add extra exchanges to correct things. A tournament with a few hundred 1-point exchanges and a single 3-point exchange is still a non-weighted tournament. And thus I moved to looking at the average number of points awarded in a tournament.
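To make the difference between the two criteria concrete, here is a small sketch. The function names and the 1.1 cutoff are placeholders I picked for illustration, and treating "at or above the threshold" as weighted is an assumption.

```python
from statistics import mean

def average_exchange_value(points):
    """Mean points awarded per recorded exchange in one tournament."""
    return mean(points) if points else 0.0

def is_weighted(points, threshold=1.1):
    """Treat a tournament as weighted if its average value reaches the threshold.

    The 1.1 default is a placeholder, not a value taken from the analysis.
    """
    return average_exchange_value(points) >= threshold

# A few hundred 1-point exchanges plus a single 3-point correction entry.
flat_with_correction = [1] * 300 + [3]
print(max(flat_with_correction))                     # the max criterion sees a 3
print(average_exchange_value(flat_with_correction))  # the average stays just above 1
print(is_weighted(flat_with_correction))             # still treated as non-weighted
```

A single stray entry moves the maximum all the way to 3 but barely moves the average, which is why the average is the more forgiving filter.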

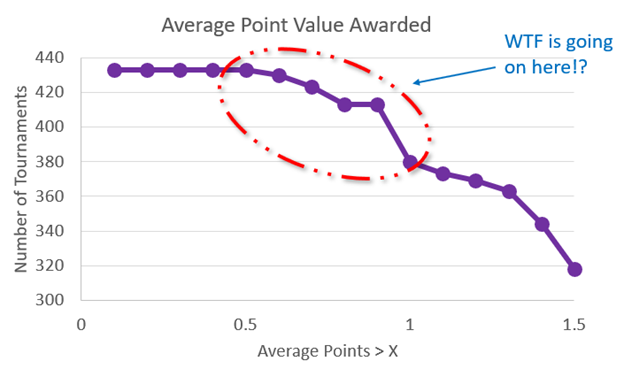

But what should I set the threshold at? I decided to sweep through and see at what point the number of tournaments drops off, and…

Why am I seeing about 50 tournaments with an average point value (on scoring exchanges) of less than 1 point? Turns out it is because there are several full afterblow tournaments which are not recording their exchanges correctly. Instead of inputting the proper point values they enter 0 points awarded when both fighters score the same value. So I try again only looking at clean hits, and things look much better.
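A rough sketch of that sweep, run once over every recorded exchange and once over clean hits only. The `(points, is_clean)` record format, the `sweep_eligible` name, and the "average at or above threshold" comparison are assumptions for illustration; the 0.1 to 7.0 range matches the table at the end of the article.

```python
def sweep_eligible(tournaments, clean_only=False):
    """Count tournaments whose average exchange value reaches each threshold.

    tournaments: dict of name -> list of (points, is_clean) tuples (hypothetical).
    Returns a dict of threshold -> number of eligible tournaments.
    """
    averages = []
    for exchanges in tournaments.values():
        values = [pts for pts, clean in exchanges if clean or not clean_only]
        if values:  # skip tournaments with nothing left after filtering
            averages.append(sum(values) / len(values))
    # Thresholds 0.1, 0.2, ..., 7.0, built from integers to avoid drift.
    return {t / 10: sum(avg >= t / 10 for avg in averages) for t in range(1, 71)}

tournaments = {
    # Full afterblow event that logs equal-value doubles as 0 points.
    "afterblow": [(0, False), (0, False), (2, True), (1, True)],
    # Plain 1-point-per-hit event.
    "flat": [(1, True)] * 5,
}
all_exchanges = sweep_eligible(tournaments)
clean_hits = sweep_eligible(tournaments, clean_only=True)
print(all_exchanges[0.8], clean_hits[0.8])
```

In this toy data the afterblow event averages 0.75 over all exchanges but 1.5 over clean hits, the same effect that pushed about 50 real tournaments under 1 point.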

You Haven’t Told Us Anything Useful Yet

Indeed.

Now that I know how I’m going to get my dataset, it’s time to mine some data.

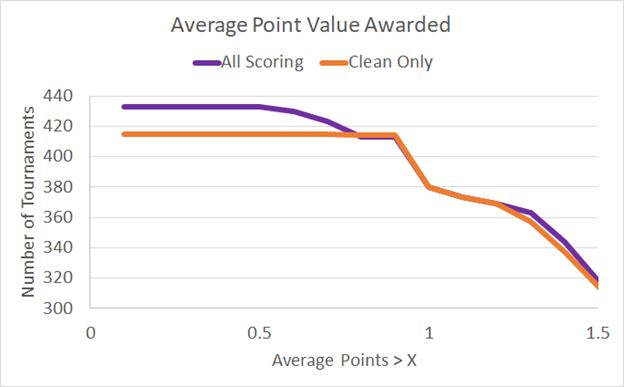

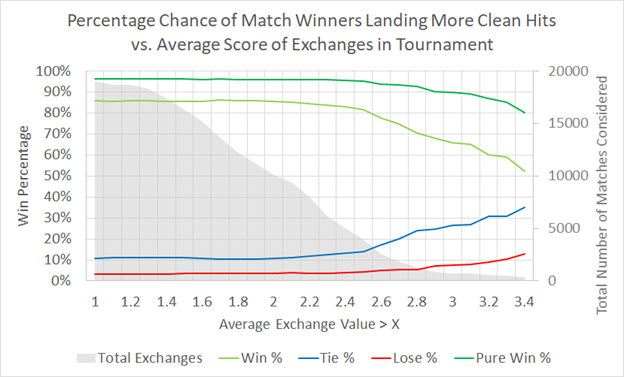

Bam. Looks like there is very little change if we remove weighted scoring. If you disallow ties then you will get the same result 96% of the time. But does it matter how heavily you are weighting your tournaments? Surely a tournament which awards points from 1-4 will be more likely to overturn results than a tournament which only gives 1-2 points?

Effect of Point Spread

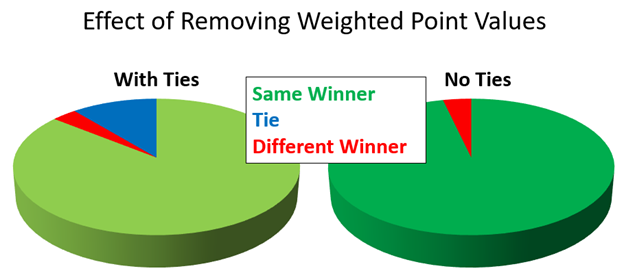

So we repeat the same experiment, while varying the threshold of which tournaments we include.
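In code terms, repeating the experiment per threshold amounts to something like the sketch below. It assumes each tournament has already been reduced to its average exchange value plus a list of per-match outcome labels; that input format and the `tally_by_threshold` name are hypothetical.

```python
from collections import Counter

def tally_by_threshold(tournaments, thresholds):
    """Tally match outcomes over the tournaments that pass each threshold.

    tournaments: list of (average_exchange_value, [outcome labels]) pairs.
    thresholds: iterable of average-value cutoffs to test.
    """
    table = {}
    for threshold in thresholds:
        counts = Counter()
        for average, outcomes in tournaments:
            if average >= threshold:  # assumption: inclusive cutoff
                counts.update(outcomes)
        table[threshold] = counts
    return table

# Made-up outcomes for three tournaments, purely to show the shape of the result.
tournaments = [
    (1.0, ["no change", "no change", "tie"]),
    (2.0, ["no change", "different result"]),
    (3.0, ["different result"]),
]
for threshold, counts in tally_by_threshold(tournaments, [1.0, 2.0, 3.0]).items():
    print(threshold, counts["no change"], counts["tie"], counts["different result"])
```

Each row of the output has the same shape as a row of the outcome tables at the end of the article, and the shrinking totals at higher thresholds are where the small sample problem comes from.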

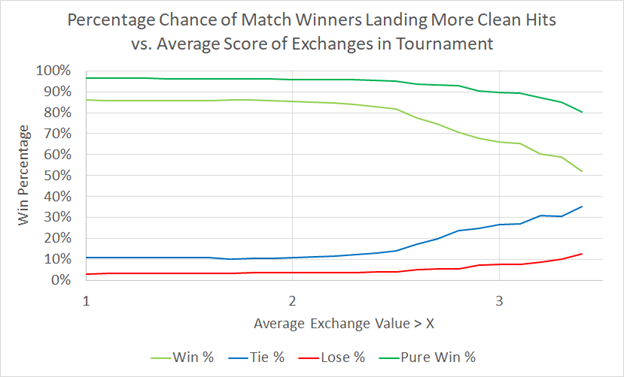

Everything seems somewhat stable until we get to an average exchange value of greater than 2.5. After that the weighting seems to become significantly more important. Or does it?

Around 2.5 points per exchange is the region where we get into really small sample sizes. With a few tournaments dominating the narrative the numbers certainly can’t be taken to be indicative of an overall trend. So the original observations stand.

Sanity Check

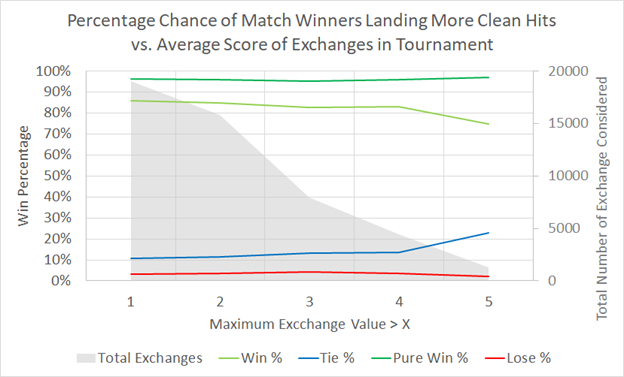

Earlier I said that I didn't want to use the maximum points awarded in a single exchange as the criterion for deciding whether a tournament used weighted or unweighted scoring. But we can use it as a point of comparison with this data, to make sure it is all sensible.

And it looks about the same. After 4 points we are getting into small sample size territory, and we start to see the number of ties increase as a result of our point flattening changes. But overall the same numbers check out.

Why Weight?

This data shows fairly conclusively that weighted scoring really doesn’t affect the outcome of the match very much. Nineteen times out of twenty you will end up with the same overall result.

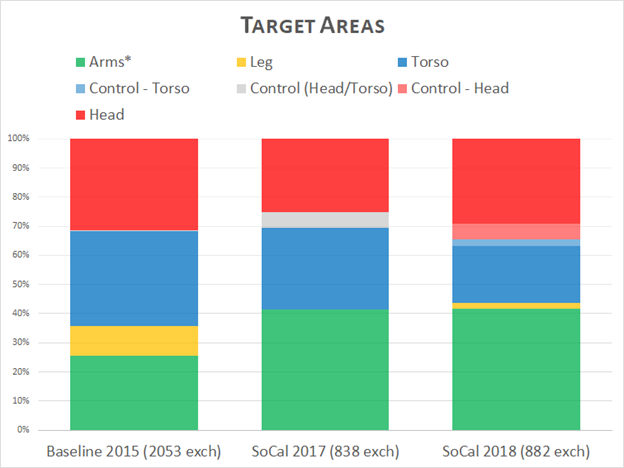

But is that even important? Even if the weighting doesn't affect the match outcome it can still have a significant impact on participant behavior. Looking back to Data Mining – SoCal Swordfight Longsword we can see significant changes in the targeting when point values were changed year over year. And that was a minor change, shuffling the point values within a fairly stable ruleset. Going from tournament to tournament I would expect to see even more variation (as soon as I figure out a good way to parse and wrap my head around it).

Because of its ability to change participant behavior, I still strongly advocate having weighted targets. But it has become very obvious by now that better fencers will get it done regardless of how you award the points.

Stuff for Nerds

Data dumps!

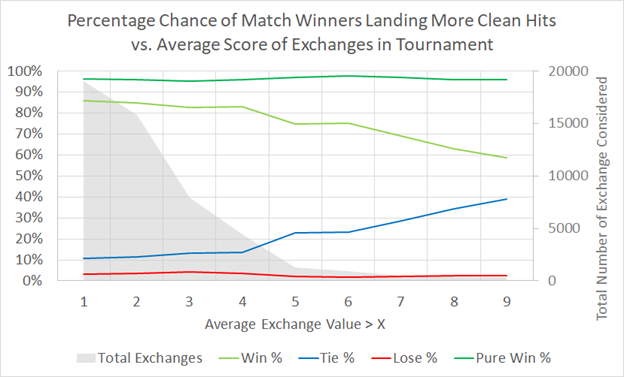

What happens if I look at tournaments with really big score discrepancies?

Number of Eligible Tournaments:

| Average Point Threshold | All Scoring Exchanges | Clean Exchanges Only |
| --- | --- | --- |
| 0.1 | 433 | 415 |
| 0.2 | 433 | 415 |
| 0.3 | 433 | 415 |
| 0.4 | 433 | 415 |
| 0.5 | 433 | 415 |
| 0.6 | 430 | 415 |
| 0.7 | 423 | 415 |
| 0.8 | 413 | 414 |
| 0.9 | 413 | 414 |
| 1 | 380 | 380 |
| 1.1 | 373 | 373 |
| 1.2 | 369 | 369 |
| 1.3 | 363 | 357 |
| 1.4 | 344 | 337 |
| 1.5 | 318 | 314 |
| 1.6 | 295 | 293 |
| 1.7 | 267 | 262 |
| 1.8 | 241 | 238 |
| 1.9 | 222 | 219 |
| 2 | 206 | 199 |
| 2.1 | 193 | 185 |
| 2.2 | 163 | 159 |
| 2.3 | 139 | 129 |
| 2.4 | 111 | 106 |
| 2.5 | 86 | 86 |
| 2.6 | 59 | 62 |
| 2.7 | 40 | 48 |
| 2.8 | 32 | 32 |
| 2.9 | 19 | 22 |
| 3 | 15 | 17 |
| 3.1 | 12 | 16 |
| 3.2 | 11 | 13 |
| 3.3 | 8 | 11 |
| 3.4 | 8 | 8 |
| 3.5 | 5 | 4 |
| 3.6 | 5 | 4 |
| 3.7 | 4 | 4 |
| 3.8 | 4 | 4 |
| 3.9 | 4 | 4 |
| 4 | 4 | 4 |
| 4.1 | 4 | 4 |
| 4.2 | 3 | 3 |
| 4.3 | 3 | 3 |
| 4.4 | 2 | 2 |
| 4.5 | 2 | 2 |
| 4.6 | 2 | 2 |
| 4.7 | 2 | 2 |
| 4.8 | 2 | 2 |
| 4.9 | 2 | 2 |
| 5 | 2 | 2 |
| 5.1 | 2 | 2 |
| 5.2 | 2 | 2 |
| 5.3 | 2 | 2 |
| 5.4 | 2 | 2 |
| 5.5 | 2 | 2 |
| 5.6 | 2 | 2 |
| 5.7 | 2 | 2 |
| 5.8 | 2 | 2 |
| 5.9 | 2 | 2 |
| 6 | 2 | 2 |
| 6.1 | 2 | 2 |
| 6.2 | 2 | 2 |
| 6.3 | 1 | 1 |
| 6.4 | 0 | 1 |
| 6.5 | 0 | 0 |
| 6.6 | 0 | 0 |
| 6.7 | 0 | 0 |
| 6.8 | 0 | 0 |
| 6.9 | 0 | 0 |
| 7 | 0 | 0 |

Number of Matches Affected By Flat Scoring

| Average Score Value | No Change | Tie | Different Result |
| --- | --- | --- | --- |
| 1 | 16398 | 602 | 2071 |
| 1.1 | 16032 | 602 | 2067 |
| 1.2 | 15999 | 602 | 2053 |
| 1.3 | 15723 | 596 | 2004 |
| 1.4 | 14926 | 575 | 1922 |
| 1.5 | 13961 | 555 | 1789 |
| 1.6 | 13025 | 533 | 1650 |
| 1.7 | 11816 | 470 | 1412 |
| 1.8 | 10522 | 439 | 1279 |
| 1.9 | 9605 | 404 | 1175 |
| 2 | 8649 | 376 | 1096 |
| 2.1 | 7961 | 351 | 1037 |
| 2.2 | 6781 | 296 | 940 |
| 2.3 | 5147 | 226 | 761 |
| 2.4 | 4134 | 197 | 654 |
| 2.5 | 3198 | 166 | 552 |
| 2.6 | 2016 | 134 | 449 |
| 2.7 | 1379 | 99 | 369 |
| 2.8 | 883 | 68 | 299 |
| 2.9 | 577 | 62 | 210 |
| 3 | 479 | 55 | 193 |
| 3.1 | 459 | 55 | 190 |
| 3.2 | 353 | 52 | 181 |
| 3.3 | 291 | 51 | 151 |
| 3.4 | 199 | 49 | 134 |

Number of Matches Affected By Flat Scoring, by Maximum Score Value

| Max Score Value Greater Than | No Change | Tie | Different Result |
| --- | --- | --- | --- |
| 1 | 16398 | 602 | 2071 |
| 2 | 13464 | 563 | 1824 |
| 3 | 6571 | 337 | 1041 |
| 4 | 3692 | 163 | 597 |
| 5 | 946 | 29 | 292 |
| 6 | 696 | 17 | 214 |
| 7 | 358 | 11 | 149 |
| 8 | 248 | 10 | 136 |
| 9 | 197 | 8 | 131 |